Nicole Sandra-Yaffa Dumont

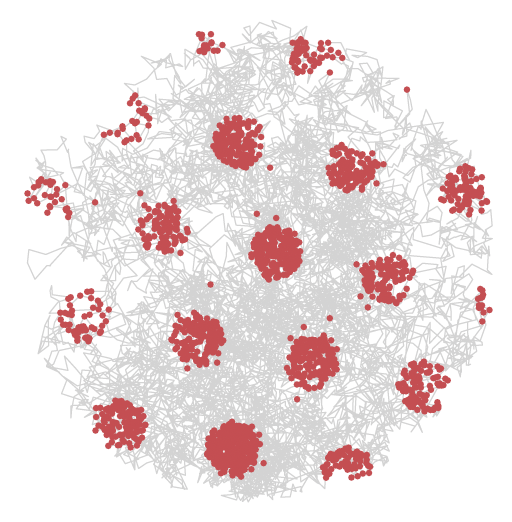

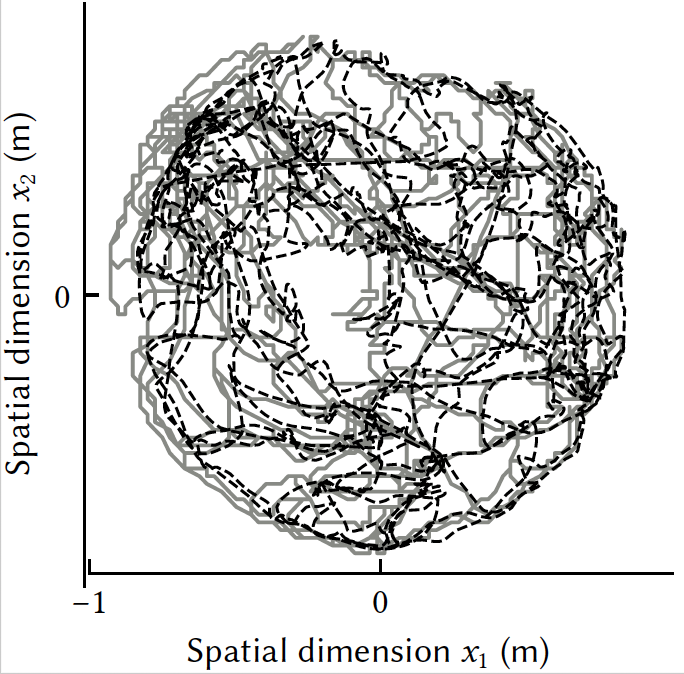

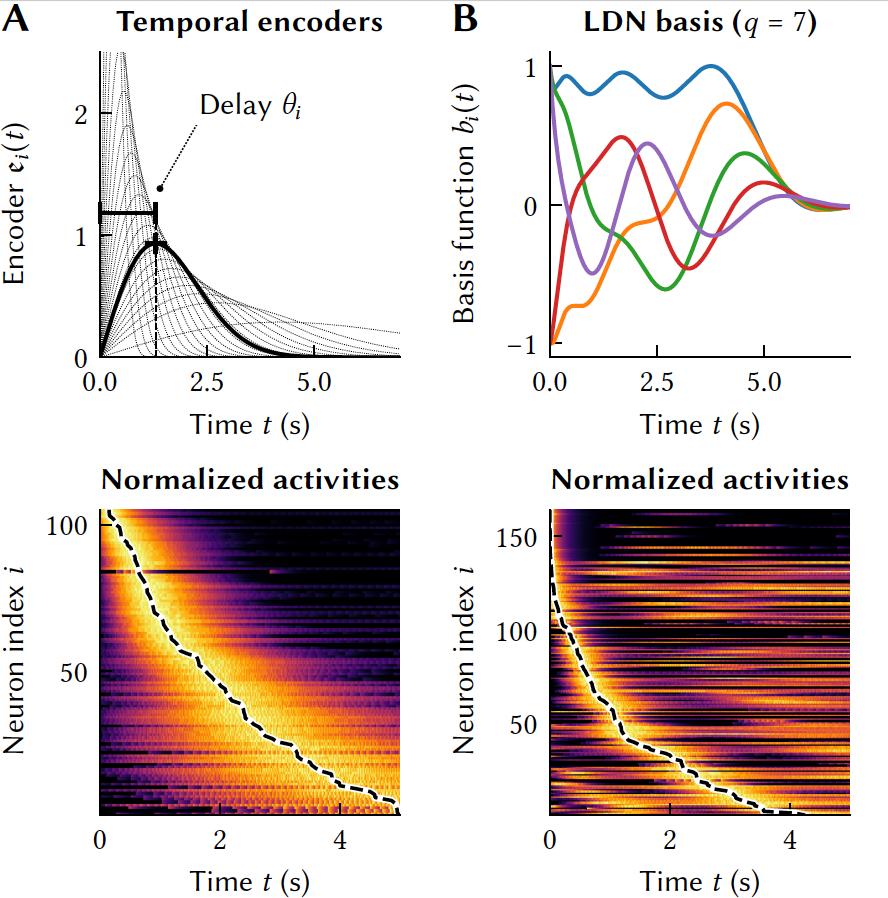

Hello! I am a postdoctoral researcher at the Institute of Neuroinformatics (INI, UZH & ETH Zürich). I am currently working on models of neural circuits underlying uncertainty in perception and learning with Prof. Katharina Wilmes (Theory of Neural Circuits Group). I previously completed my PhD in Computer Science at the University of Waterloo. I was advised by Prof. Chris Eliasmith (Computational Neuroscience Research Group) and Prof. Jeff Orchard (Neurocognitive Computing Lab), whose labs are affiliated with the Centre for Theoretical Neuroscience. My thesis, *Symbols, Dynamics, and Maps: A Neurosymbolic Approach to Spatial Cognition*, was about modelling spatial cognition in the brain with neurosymbolic methods and spiking neural networks.

I obtained an M.Math in the Computational Mathematics program at the University of Waterloo, where I researched continuous optimization and was advised by Thomas Coleman. Before that, I obtained my B.Sc. in Mathematics and Physics from McMaster University.

news

| Date | News |
|---|---|
| Dec 01, 2025 | I started a postdoc at the Institute of Neuroinformatics (INI) in Zurich. |
| Feb 22, 2025 | Applications are open for the 2025 Telluride Neuromorphic Cognition Engineering Workshop. |
| Jan 14, 2025 | I successfully defended my PhD! |
| Jan 02, 2025 | I will defend my PhD dissertation on January 13. |
| Oct 16, 2024 | Best paper award at ICANN. |